IJCRR - 2nd Wave of COVID-19: Role of Social Awareness, Health and Technology Sector, June, 2021

Pages: 115-122

Date of Publication: 11-Jun-2021

AI-based COVID-19 Airport Preventive Measures (AI-CAPM)

Author: Vergin RS, Anbarasi LJ, Rukmani P, Sruti V, Ram G, Shaumaya O, Nithesh Gurudaas

Category: Healthcare

Abstract: Introduction: COVID-19 has affected everyone's lives. Most companies and services have found new ways to approach their work while preventing the further spread of the virus. The mode of travel has also changed significantly. Governments are trying their best to maintain all possible safety norms at airports and railway stations. The idea is to ensure that people maintain social distancing and wear masks. Objectives: The main aim of the proposed work (AI-CAPM) is to reduce the contact between staff and passengers. To ensure the genuineness of the Aadhar card and other IDs, the system uses face detection algorithms that detect faces and admit only those passengers who have tickets booked for that particular day. Methods: The proposed work uses the Local Binary Patterns Histograms (LBPH) Face Recognizer for face recognition, a social distancing monitor using a DNN, and mask detection with a CNN. Results: This research work builds a social distancing monitor model using the Single Shot Detector (SSD). SSD takes one single shot to detect multiple objects within the image. SSD covers all computation in a single network by eliminating object proposal generation and the subsequent pixel or feature resampling stages. This makes SSD easy to train and simple to integrate into systems that require a detection component. SSD is faster and has much better accuracy than other algorithms. Conclusion: The software developed in this work proposes an autonomous verification of a passenger, as it displays the passenger's train/flight details and boarding area by recognizing them from the passenger database. This removes the need for manual checking of the passenger before boarding. Overall, the software automates tasks and reduces human involvement, which is needed in this pandemic-struck world to contain the spread of the virus.

Keywords: COVID-19, Safety measures at the airport, Face detection/authentication, Mask detection, Social distancing

Full Text:

Introduction

The entire world has come to a standstill because of the COVID-19 pandemic. The spread of the disease is increasing day by day and has shaken the stability of the entire world. The pharmaceutical industry is striving to produce a vaccine on one side, and medical experts are trying their best to bring down the death rate on the other. Prevention is always the better solution, so social distancing and wearing masks have become an absolute priority. The COVID-19 virus spreads mainly through droplets that a carrier expels, which travel through the air until gravity pulls them down. The maximum distance those droplets can travel is about six feet. Masks work as an effective barrier against the droplets and protect the wearer as well as the people around.1,2

Irresponsible members of the community who roam outside without wearing a mask increase the risk of transmission. Humans also expel aerosol particles, which are about 1/100th the width of a human hair and are more difficult to defend against. Social distancing and staying outdoors, where there is more airflow, are the remedies for this kind of transmission. About 30% of infections are caused by asymptomatic people, who show no symptoms of COVID-19. If they do not follow social distancing norms and do not wear masks, they become the main reason behind the spread of the disease. It has been found that the risk of transmission of the virus is reduced by about 65% if people wear masks and by about 90% if people follow the social distancing norms.

The research work focuses on reducing the contact between the staff members working at the airport and the passengers, and on ensuring that all passengers follow social distancing norms and wear masks. The cameras installed at the airport will be used to detect people who are not obeying the social distancing norms or not wearing masks, and the installed speakers will be used to announce reminders.3,4 The staff members at the entry gate, who check the passengers' tickets and other documents before allowing them to enter, can be replaced by the face detection/authentication model. The passengers' face images and details, registered at the time of booking the tickets, are stored in the airport database, and this data is used to authenticate the passengers through the cameras installed at the entry gate. Artificial intelligence and computational intelligence techniques are applied in diverse areas such as e-healthcare, smart cities and smart grids,9 data processing,10 and predictive maintenance.11 Likewise, AI-based techniques play a vital role in this research work. The proposed work uses LBPH, which labels the pixels of an image by thresholding the neighbourhood of each pixel and treats the result as a binary number.5,6

In today’s world, which has been widely affected by COVID-19, people must wear masks to prevent the spread of the novel coronavirus. It is necessary to monitor people in public places and ensure that they are wearing masks and following social distancing norms. The research work is based on a deep learning model developed using Keras that identifies people without masks in a live camera feed so that they can be instructed to wear masks as soon as possible. This model can be deployed on the CCTV cameras of airports and railway stations to monitor people continuously and remotely without manual checking, which also reduces human involvement. The speakers can then be used to call out the people violating the norms and ask them to wear masks.7,8

This research work also intends to build a social distancing monitor model with which security officials can check whether people are maintaining social distancing by looking at a screen displaying the airport's video footage, instead of being physically present everywhere. For those who are not following the safety norms, the speaker automatically announces “Please Maintain Social Distancing”. Based on facial recognition, the system can extract information about the concerned person and store it as a violation under their ID, so that they can be fined later during boarding. To develop this, the Single Shot Detector algorithm is used for object detection and MobileNet for image classification, together with pre-trained MobileNet model files that were trained in the Caffe-SSD framework.

The Social Distancing Monitor Model uses the Single Shot Detector (SSD) for object detection. SSD takes one single shot to detect multiple objects within the image. SSD covers all computation in a single network by eliminating object proposal generation and the subsequent pixel or feature resampling stages. This makes SSD easy to train and simple to integrate into systems that require a detection component. SSD is faster and has much better accuracy than other algorithms. Other algorithms that could have been used for object detection are Faster R-CNN and YOLO.9,10

Literature Survey

The research work explains in detail the MobileNetV2 SSD layer,12 its architecture, the images it was trained on and how it can be applied in deep learning models using transfer learning. The research work explains the different CNN architectures and how to implement them to solve a deep learning problem. This research paper was used to understand what MobileNet is, how it is structured, how it works and how it is used in embedded vision applications. It was also used to understand how MobileNet compares with other models for ImageNet classification and how it is effective across a wide range of applications such as object detection.13,14

The authors explained the scope of facial recognition based on deep learning and highlighted the immense usage of facial recognition in the field of biometrics. The researchers in reference 16 explain a system that performs facial recognition/authentication from a live video feed. The challenges of detecting and authenticating people with similar facial characteristics, or of identifying identical twins, are addressed. The work in reference 17 proposes a CNN-based algorithm for face detection and recognition in which students' attendance is taken using facial recognition. Using an IoT and edge-computing approach, the facial recognition data of the students are computed and then transmitted. This system gives an accuracy of about 97.8%.15

The research work was used to understand how SSD detects objects in an image: it generates scores for the presence of an object and draws a box around the object. This paper was also used to understand why SSD is easy to train and integrate into systems, and how it is much faster and more accurate than the other models. The work was used to learn about the MS-COCO dataset: which images it comprises, how it compares with other datasets such as PASCAL and SUN for object detection and classification, and its detailed statistical analysis of the dataset together with a baseline performance analysis for bounding box detection.16-19

Proposed Work

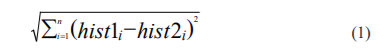

Face detection

LBPH is used for face recognition. It uses the parameters Grid X, the number of cells in the horizontal direction; Grid Y, the number of cells in the vertical direction; and radius, which defines the circular local binary pattern around the central pixel and is usually set to 1. Sample images of the passengers are taken while they are booking their flight tickets. Histograms of the sample images are stored in the airport database along with an ID number, which can be the Aadhar card number. When these passengers go to the airport, the camera takes an input image. The histogram of this input image is compared with the histograms of the sample images in the airport database, and the output is the set of details (the passenger’s name, age, gender and flight details) of the image with the closest histogram. If the passenger’s details are not in the airport database, the face is identified as UNKNOWN and the passenger is not allowed to enter the airport. The ID number or Aadhar card number is used to uniquely identify the sample images and the details of each passenger. Euclidean distance20 is used to compare any two histograms (hist1 and hist2), as given in equation 1. The overall flow of this work is given in figure 1.7,8
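Equation 1 is not reproduced in this text. As a reference, the standard Euclidean distance between two histograms of equal length n, which is presumably what the equation expresses, can be written as follows (the symbols D, n and i are introduced here for illustration):

```latex
D(\mathrm{hist1}, \mathrm{hist2}) = \sqrt{\sum_{i=1}^{n} \left( \mathrm{hist1}_i - \mathrm{hist2}_i \right)^{2}}
```

A smaller D means the two histograms, and hence the two face images, are more similar.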

Algorithm

(While booking the flight tickets)

Step 1: Enter aadhar card number, name, age, gender, flight details etc.

Step 2: A dataset consisting of 200 sample face images is taken.

Step 3: The images are converted to grayscale, and each pixel is processed together with its neighbours as a 3x3 matrix.

Step 4: The central value of the matrix is used as the threshold value.

Step 5: For each neighbour of the central value of the matrix, if the value is greater than or equal to the threshold, set 1, else set 0.

Step 6: The binary values from each position of the matrix are taken line by line and concatenated to form a new binary value.

Step 7: The binary value is converted to a decimal value, and the central value of the matrix is replaced by this decimal value.

Step 8: The image is divided into multiple grids by using Grid X and Grid Y.

Step 9: Each grid yields a histogram that contains the occurrences of each pixel intensity, i.e. of each value from 0 to 255.

Step 10: The histograms are concatenated to form a bigger histogram.

Step 11: Repeat Steps 3 to 10 for all the 200 samples.

Step 12: The obtained images along with details are stored in the airport database.

(At the airport)

Step 13: Show the face to the camera at the airport entrance.

Step 14: The trained algorithm creates a histogram for the input image by applying Steps 3 to 10.

Step 15: This histogram is compared with the histograms in the airport database.

Step 16: If the histogram of the input image matches the histogram of any image in the airport database, display the details of the passenger. Otherwise, display Unknown.
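The steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the `max_distance` cut-off and the dictionary-based database are hypothetical, the neighbours are read line by line as in Step 6, and border pixels are simply skipped.

```python
import math

def lbp_image(gray):
    """Steps 3-7: replace each interior pixel by its 8-bit LBP code."""
    # Neighbour offsets read line by line around the centre (centre excluded).
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]
    codes = []
    for r in range(1, len(gray) - 1):
        row = []
        for c in range(1, len(gray[0]) - 1):
            centre = gray[r][c]  # Step 4: centre value is the threshold
            code = 0
            for dr, dc in offsets:
                bit = 1 if gray[r + dr][c + dc] >= centre else 0  # Step 5
                code = (code << 1) | bit  # Step 6: concatenate bits
            row.append(code)  # Step 7: decimal value replaces the centre
        codes.append(row)
    return codes

def grid_histogram(codes, grid_x, grid_y):
    """Steps 8-10: one 256-bin histogram per grid cell, concatenated."""
    rows, cols = len(codes), len(codes[0])
    hist = []
    for gy in range(grid_y):
        for gx in range(grid_x):
            cell = [0] * 256
            for r in range(gy * rows // grid_y, (gy + 1) * rows // grid_y):
                for c in range(gx * cols // grid_x, (gx + 1) * cols // grid_x):
                    cell[codes[r][c]] += 1
            hist.extend(cell)
    return hist

def euclidean(hist1, hist2):
    """Euclidean distance between two histograms of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(hist1, hist2)))

def identify(input_hist, database, max_distance):
    """Steps 15-16: return the closest stored ID, or "Unknown".

    `database` maps an ID to a stored histogram; `max_distance` is a
    hypothetical cut-off above which no match is accepted.
    """
    best_id, best_distance = "Unknown", max_distance
    for passenger_id, stored_hist in database.items():
        distance = euclidean(input_hist, stored_hist)
        if distance <= best_distance:
            best_id, best_distance = passenger_id, distance
    return best_id
```

In practice an optimized library implementation would normally be used instead of these pure-Python loops; the sketch only makes the individual steps explicit.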

Dataset

The passengers have to face the camera of their mobile phone or laptop while booking the tickets, and around 200 image samples are collected. All the details of the passengers, along with their face images, are stored in the airport database. Figure 2 shows a passenger entering the details while booking the ticket. (Here the ID is entered as 1 by the passenger, but in practice this ID would be the Aadhar card number, which uniquely identifies each citizen of India.) The database as collected, stored and trained is shown in figures 3(a), 3(b) and 4.9,10,11

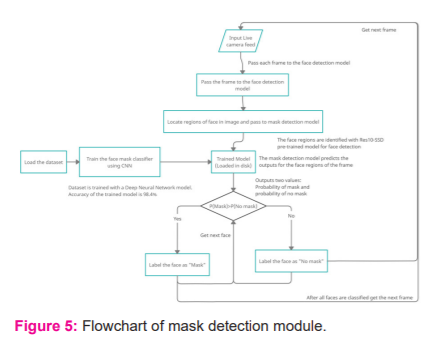

Mask Detection

The proposed work identifies all the people in an image or live feed and uses a CNN (Convolutional Neural Network) model to classify them as wearing a mask or not, based on the differences in the regions of interest on their faces. The regions of interest are the mouth and nose, as they differ between people with and without masks. By training a deep learning model, the weights of the network architecture are estimated so that it can classify people as wearing masks or not by identifying the differences in these regions of the face. The overall architecture of the proposed flow is shown in figure 5, and the database created with and without masks is given in figures 6(a) and 6(b).

Algorithm

Step 1: Load the training dataset images

Step 2: Train the developed neural network architecture over the training dataset with masked and non-masked inputs and obtain the accuracy metrics

Step 3: The neural network locates the regions of interest in the face – nose, mouth which would differ for masked and non-masked images and adjusts the weights of each neuron to maximize the accuracy.

Step 4: Deploy the model in a camera to monitor the live feed.

Step 5: The camera captures each frame of the feed and passes it to the model.

Step 6: The model first detects the faces in each frame with the pre-trained res10 Caffe architecture and passes the coordinates of each face to the mask detector.

Step 7: The mask detector model receives the coordinates of the faces, calculates the probability that each person is wearing a mask, and labels the person as safe or unsafe.

Step 8: The camera feed then passes the next frame, and the same check is repeated.

Dataset

The dataset consists of 700 people wearing masks and 700 people without masks for training the model.

Deep Learning Model

The input image is passed to Google’s MobileNetV2 CNN architecture. Transfer learning is a deep learning technique in which the weights of a model trained for a similar purpose are reused in another model. Here the pre-trained weights of the MobileNetV2 model are used. MobileNetV2 is a pre-trained neural network architecture released by Google. It is a CNN model that has 53 layers and was trained on over a million images from ImageNet. MobileNetV2 takes a 224×224 image as input and gives the tensor of the last convolutional block of the model, which represents the scores for the input image. The MobileNet layer’s output is passed on to a set of custom neural network layers built using Keras (Flatten, Dense and Dropout) and finally to the output layer with 2 units (one indicating the probability of wearing a mask and the other the probability of not wearing one) with softmax activation. Alternatives that could be used instead include the PyTorch library and the DenseNet architecture.12-16
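To make the final layer concrete, the following minimal sketch shows how a softmax activation turns the two raw output scores into probabilities and how the label is chosen. The function names and the order of the two classes are assumptions for illustration; the article does not state which output unit corresponds to which class.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def mask_label(scores, classes=("with_mask", "without_mask")):
    """Return the more probable class and its probability.

    The class order is hypothetical; it depends on how the training
    labels were encoded.
    """
    probabilities = softmax(scores)
    index = probabilities.index(max(probabilities))
    return classes[index], probabilities[index]
```

For example, `mask_label([2.0, -1.0])` selects the first class because its score, and therefore its softmax probability, is higher.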

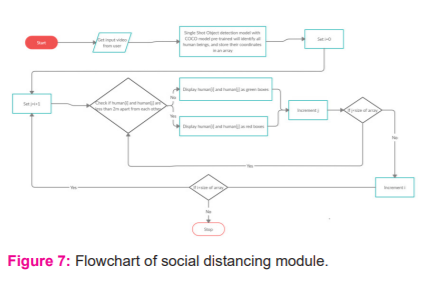

Social Distancing Algorithm

The social distancing detection phase is explained in Figure 7. It is further described in the following steps.

Step 1: The camera records video or provides a live video feed.

Step 2: The Social Distancing Monitor Model gets each frame from the video and processes it.

Step 3: It then detects all objects present in the frame.

Step 4: It then checks which of the objects qualify as human beings and draws a bounding box around each of them.

Step 5: It calculates the distances between the centroids of all the bounding boxes and determines which of the boxes violate the distancing norms.

Step 6: Whenever the camera shows red bounding boxes, i.e. people who are not maintaining social distancing norms, it automatically plays the audio “Please Maintain Social Distancing” to remind everyone to maintain distance. Alternatively, the officer can speak on the mic, which gets switched on whenever there is a violation.

Step 7: Furthermore, based on facial recognition, it can extract information about the concerned person, store it as a violation under their ID and then fine him or her during boarding.

Dataset

A pre-trained MobileNet model, trained in the Caffe-SSD framework, is used in the Social Distancing Monitor Model; it can detect up to 20 object classes. The model was first pre-trained on the MS-COCO dataset and then fine-tuned on VOC0712. Without MS-COCO pre-training it reaches only mAP = 0.68; with pre-training it reaches mAP = 0.727. There are two key points in this model: the ReLU6 layer is replaced by ReLU, and [(0.2, 1.0), (0.2, 2.0), (0.2, 0.5)] are set as the anchors for the conv11_mbox_prior layer.

MS-COCO dataset: This dataset contains images of 91 object types that could easily be recognized by a 4-year-old. The objects are labelled using per-instance segmentation, which assists precise object localization.

VOC0712 dataset: This dataset includes a total of 11540 images, each of which contains a set of objects from 20 different categories, making a total of 27450 annotated objects.15-17

Deep Learning Model for Detecting People

Caffe is a deep learning library that is well suited to vision and prediction applications. Networks are defined through configuration options, and pre-trained networks shared by the online community can be reused. Caffe lets the user set the hyperparameters of the network, and it is optimized for computational efficiency. Caffe also supports GPUs through CUDA.

Detecting people in a video file and calculating distance between people

The Social Distancing Monitor Model uses OpenCV in Python with Caffe model files to detect people. The Caffe model is a generic detector that recognizes several object classes in a frame, not only people. A for loop therefore checks each object returned by the detector. It first checks the confidence value of the detected object, which is the probability that an anchor box contains an object. If the confidence is above the threshold value, it takes the class index of the object and checks whether that class is “person”. If it is a person, it takes the coordinates of the object and draws a rectangle around it.
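The filtering loop described above can be sketched as follows. This is an illustration under assumptions rather than the authors' code: each detection is assumed to be a row of the form (image_id, class_id, confidence, x1, y1, x2, y2) with box coordinates normalized to the range 0 to 1, the class list is assumed to be the 20 PASCAL VOC classes plus background, and the function name and the default threshold of 0.5 are hypothetical.

```python
# Assumed class list: background plus the 20 PASCAL VOC categories.
VOC_CLASSES = [
    "background", "aeroplane", "bicycle", "bird", "boat", "bottle", "bus",
    "car", "cat", "chair", "cow", "diningtable", "dog", "horse",
    "motorbike", "person", "pottedplant", "sheep", "sofa", "train",
    "tvmonitor",
]
PERSON_ID = VOC_CLASSES.index("person")

def person_boxes(detections, frame_width, frame_height, conf_threshold=0.5):
    """Keep confident "person" detections and return pixel bounding boxes."""
    boxes = []
    for _, class_id, confidence, x1, y1, x2, y2 in detections:
        if confidence < conf_threshold:
            continue  # detector is not confident enough
        if int(class_id) != PERSON_ID:
            continue  # some other object class
        # Scale the normalized coordinates to pixel coordinates.
        boxes.append((
            int(x1 * frame_width), int(y1 * frame_height),
            int(x2 * frame_width), int(y2 * frame_height),
        ))
    return boxes
```

Each returned tuple holds the top-left and bottom-right corners of a rectangle that can be drawn around the person in the frame.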

The distance between two people is calculated from the coordinates of their bounding boxes. If the distance is less than a predefined threshold value, the people are too close to each other: their boxes are shown in red and the audio “Please Maintain Social Distancing” starts playing. If the distance is greater than the threshold value, the boxes are shown in green. To do this, all the detected persons are first stored in an array along with their IDs and bounding boxes. A second structure, centroid_dict, then stores each object ID along with the centroid of its bounding box. The combinations function from Python's itertools module is used to generate every pair of entries in centroid_dict, and for each pair the distance between the two centroids is calculated and compared with the threshold value.18,19
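A minimal sketch of this pairwise check is shown below. The name centroid_dict and the use of combinations come from the description above; the function names, the box format (x1, y1, x2, y2) and the use of list positions as object IDs are assumptions for illustration. The default threshold of 75 is the value reported in the results section.

```python
import math
from itertools import combinations

def centroid(box):
    """Centre point of a bounding box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)

def find_violations(boxes, threshold=75):
    """Return the IDs of all people closer than `threshold` to someone else."""
    # Map each object ID (here: its position in the list) to its centroid.
    centroid_dict = {obj_id: centroid(box) for obj_id, box in enumerate(boxes)}
    red_ids = set()
    # Compare every unordered pair of detected people exactly once.
    for (id_a, c_a), (id_b, c_b) in combinations(centroid_dict.items(), 2):
        distance = math.hypot(c_a[0] - c_b[0], c_a[1] - c_b[1])
        if distance < threshold:
            red_ids.update((id_a, id_b))
    return red_ids
```

Boxes whose IDs are in the returned set would be drawn in red and trigger the audio warning; all other boxes would be drawn in green.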

Results and Discussion

Figure 8 shows how the website grants control to the staff members to check for Mask detection, Face detection & Authentication and ensure Social Distancing.

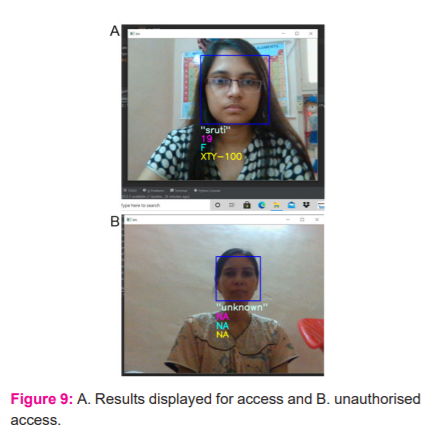

Face Detection

Figure 9A shows a sample in which the face is available in the airport dataset, so all the details are displayed and the passenger is allowed to enter the airport. Figure 9B shows a face that is not available in the airport dataset, so Unknown is displayed and the passenger is not allowed to enter the airport.

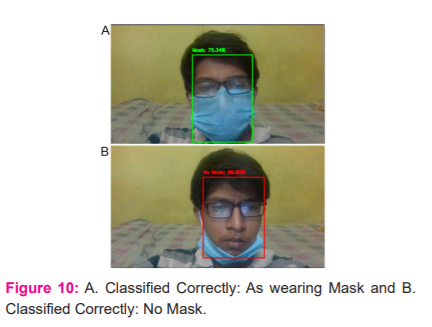

Mask Detection

The model was trained for 20 epochs with a batch size of 32 to test its performance. The accuracy obtained after 20 epochs was 98.4%. The result is two probability values, one for With Mask and one for Without Mask, and the model predicts the class with the higher value. Results of the mask and no-mask classification are shown in Figure 10.

Social Distancing

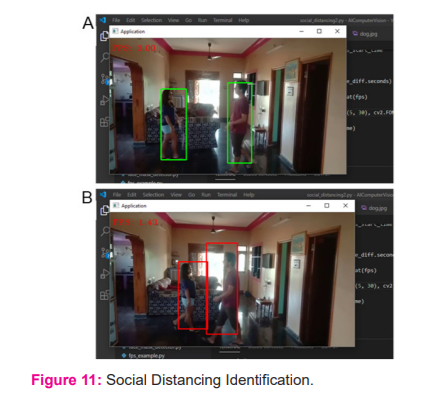

People are identified and rectangular boxes are drawn around them. If they are at a safe distance, they are displayed in green boxes. If they are not at a safe distance, i.e. they are closer than the threshold, they are displayed in red boxes and the audio “MAINTAIN SOCIAL DISTANCING” starts. In a practical situation, this can be played through speakers installed at the airport. The officer can also speak on the mic to alert people to maintain a distance, because the mic is switched on automatically whenever someone violates the norm and appears in a red box. Here the threshold is a Euclidean distance of 75. The appropriate threshold depends on the resolution, the camera angle, the height of a person, etc. Social distancing as identified by the proposed models is shown in Figure 11.

Conclusion

Deep learning has had a massive influence on today’s computer vision applications and is a constantly growing field. In this paper, deep learning models are used to help automate tasks in public places such as railway stations and airports and to reduce manual work. With the COVID-19 lockdown ending and the world ready to move back to normal without a proper vaccine, people must follow the safety protocols of wearing a mask and maintaining social distancing to reduce the spread of the virus. The deep learning models developed to detect masks and monitor social distancing can, when deployed, be used for remote monitoring of people in airports and railway stations to ensure that they follow the safety protocols. Facial recognition is another growing field among today’s authentication methods. The software developed proposes an autonomous verification of a passenger, as it displays the passenger’s train/flight details and boarding area by recognizing them from the passenger database. This removes the need for manual checking of the passenger before boarding. Overall, the software automates tasks and reduces human involvement, which is needed in this pandemic-struck world to contain the spread of the virus.

Acknowledgement: Authors acknowledge the immense help received from the scholars whose articles are cited and included in references of this manuscript. The authors are also grateful to authors/editors/publishers of all those articles, journals and books from where the literature for this article has been reviewed and discussed.

Conflict of Interest:

The Author(s) declare(s) that there is no conflict of interest.

Source of Funding: None

References:

1. Wong SY, Tan BH. Megatrends in infectious diseases: the next 10 to 15 years. Ann Acad Med. 2019;48(6):188-94.

2. Sekar SN, Anbarasi LJ, Dhanya D. An Efficient Distributed Compressive Sensing Framework For Reconstruction of Sparse Signals in Mechanical Systems. J Mech Engg Tech. 2018;9(13):1286–1292.

3. Senthil Kumar AP, Narendra M, Anbarasi LJ, Raj BE. Breast cancer Analysis and Detection in Histopathological Images using CNN Approach. In Proceedings of International Conference on Intelligent Computing, Information and Control Systems. 2021;335-34

4. Guan WJ, Ni ZY, Hu Y, Liang WH, Ou CQ, He JX, Liu L. Clinical characteristics of coronavirus disease 2019 in China. New Eng J Med. 2020;382(18):1708-20.

5. Chu DK, Akl EA, Duda S, Solo K, Yaacoub S, Hajizadeh A. Physical distancing, face masks, and eye protection to prevent person-to-person transmission of SARS-CoV-2 and COVID-19: a systematic review and meta-analysis. Lancet. 2020;395(10242):1973-87.

6. Leung CC, Lam TH, Cheng KK. Mass masking in the COVID-19 epidemic: people need guidance. Lancet. 2020;395(10228):945.

7. Sarobin MV, Alphonse S, Gupta M, Joshi T. Rapid Eye Movement Monitoring System Using Artificial Intelligence Techniques. In International Conference on Information Management & Machine Intelligence. Springer, Singapore. 2019;605-610.

8. Gondalia A, Dixit D, Parashar S, Raghava V, Sengupta A, Sarobin VR. IoT-based healthcare monitoring system for war soldiers using machine learning. Proc Comp Sci. 2019;133:1005-1013.

9. Sarobin MV, Ganesan R. Swarm intelligence in wireless sensor networks: a survey. Int J Pure Appl Math. 2015;101(5):773-807.

10. Vasudevan S, Chauhan N, Sarobin V, Geetha S. Image-Based Recommendation Engine Using VGG Model. Adv Communi Compl Tech. 2021;23:257-265.

11. Chazhoor A, Mounika Y, Sarobin MV, Sanjana MV, Yasashvini R. Predictive Maintenance using Machine Learning Based Classification Models. In IOP Conference Series: Mat Sci Engg. 2020;95(1):012001.

12. Sandler M, Howard A, Zhu M, Zhmoginov A, Chen LC. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE conference on computer vision and pattern recognition 2018;4510-4520.

13. Ferreira A, Giraldi G. Convolutional Neural Network approaches to granite tiles classification. Expt Syst J Appl. 2017;84:1-5.

14. Howard AG, Zhu M, Chen B, Kalenichenko D, Wang W, Weyand T, Andreetto M, Adam H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv: 2017;2(7):62622-62633.

15. Han X, Du Q. Research on face recognition based on deep learning. In Sixth International Conference on Digital Information, Networking, and Wireless Communications (DINWC). 2018:53-58.

16. Gandhi M, Yokoe DS, Havlir DV. Asymptomatic transmission, the Achilles’ heel of current strategies to control Covid-19. N Engl J Med. 2020;382(22):2158-2160.

17. Khan MZ, Harous S, Hassan SU, Khan MU, Iqbal R, Mumtaz S. Deep unified model for face recognition based on convolution neural network and edge computing. IEEE 2019 May 23;7:72622-72633.

18. Liu W, Anguelov D, Erhan D, Szegedy C. Single shot multibox detector. In European conference on computer vision 2016 Oct 8;21-37.

19. Veit A, Matera T, Neumann L, Matas J, Belongie S. Coco-text: Dataset and benchmark for text detection and recognition in natural images. arXiv preprint arXiv. 2016;26: 361-364.

20. Sharon JJ, Anbarasi LJ. Diagnosis of DCM and HCM Heart Diseases Using Neural Network Function. Int J App Engg Res. 2018;13(10):8664-8668.

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License