IJCRR - 13(8), April, 2021

Pages: 67-70

Date of Publication: 25-Apr-2021


Students' Perception Regarding the Conventional First MBBS Practical Examination

Author: Veena Vidya Shankar, Komala N, Shailaja Shetty

Category: Healthcare

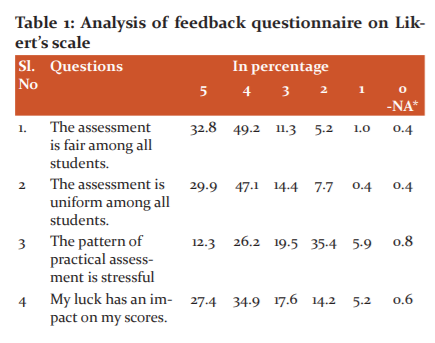

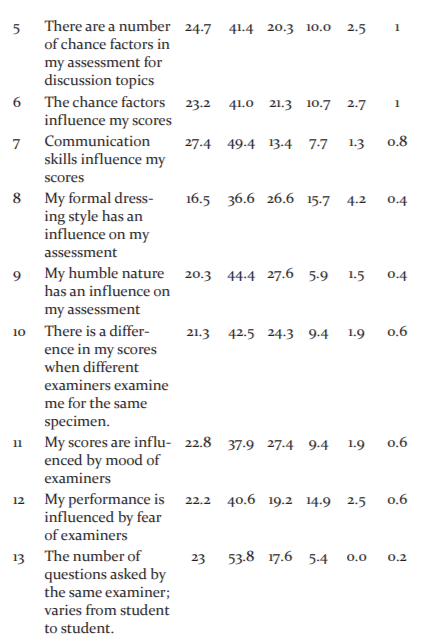

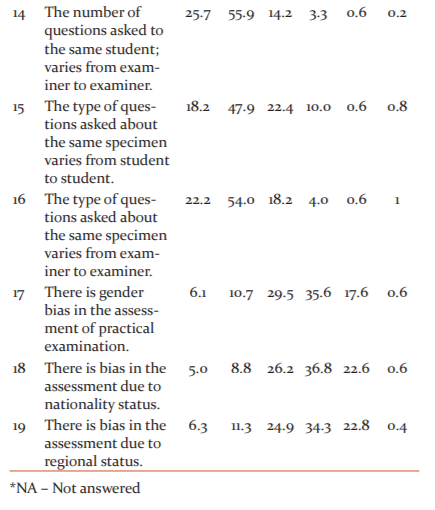

Abstract: Introduction: The assessment of MBBS phase I under Rajiv Gandhi University of Health Sciences (RGUHS), Karnataka, India, has two components: theory and practical. The theory assessment is uniform for all students, and most questions are in a structured format to ensure objectivity. This is not the case for the practical examination. Objectives: To evaluate, from the students' perspective, the factors influencing the MBBS phase I practical examination. Methods: The procedure and purpose of the feedback were explained to the students. Feedback on the University practical assessment was obtained using a structured questionnaire consisting of nineteen questions on a five-point Likert scale and two open-ended questions. The respondents' agreement or disagreement was noted. Feedback was taken from 478 undergraduate medical and dental students after the RGUHS practical examinations. Responses to each question were analysed on the Likert scale and the percentages were tabulated. Results: 82% and 77% of students agreed that the assessment was fair and uniform, respectively. 38.5% felt the practical assessment was stressful, while 41.3% felt it was not. Around 63% to 69% of students felt that luck, chance factors and examiner-related factors influenced their scores. Conclusion: The students felt that the traditional practical examination was fair, uniform and, to a certain extent, free of bias, yet stressful, with chance factors such as luck, communication skills and examiner-related factors influencing their scores.

Keywords: Objective Structured Practical Examination, Viva voce, Competency, Assessment, Evaluation, Feedback

Full Text:

INTRODUCTION

The assessment of MBBS phase I under Rajiv Gandhi University of Health Sciences (RGUHS), Karnataka, India, has two components: theory and practical. The theory assessment is uniform for all students, and most questions are in a structured format to ensure objectivity. This is not the case for the practical examination. A good practical evaluation must satisfy the criteria of objectivity, uniformity, validity, reliability and practicability.[1]

The conventional practical examination prescribed by RGUHS consists of spotters, surface marking, and discussion of gross specimens and histology slides. Although the spotters are conducted for different batches on different days, the level of assessment is more or less uniform across batches; however, a small element of subjectivity may be present. Surface marking can be made objective by using a proper checklist. In the present scenario, the discussion of gross specimens and histology slides has a major subjective component. A feedback questionnaire was therefore administered to obtain the students' perceptions of the factors that influenced the practical examination. Analysis of this feedback may throw light on the objectivity, validity and reliability of the practical examination. This study aims to evaluate, from the students' perspective, the factors influencing the MBBS phase I practical examination; such feedback may serve as a crucial input for improving the standard of assessment in the educational program.[2,3]

MATERIALS AND METHODS

The present study is a cross-sectional study conducted over 3 years at M.S. Ramaiah Medical College, Bengaluru. The sample comprised 120 first-year medical and dental undergraduate students per year who consented to participate in the study; students who did not consent were excluded. The procedure and purpose of the feedback were explained to the students. Feedback on the University practical assessment was obtained using a structured questionnaire consisting of nineteen questions on a five-point Likert scale and two open-ended questions. The respondents' agreement or disagreement was noted. Feedback was taken from 478 undergraduate medical and dental students after the RGUHS practical examinations. Responses to each question were analysed on the Likert scale and the percentages were tabulated.

Statistical Analysis

The questionnaire was validated by face validation and content validation.

The data were entered into a Microsoft Excel spreadsheet, and frequencies and percentages were calculated using SPSS software.
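The study computed frequencies and percentages in SPSS. Purely as an illustration, the following Python sketch shows how the responses to one five-point Likert item could be collapsed into agree, neutral and disagree percentages. The numeric coding (1 = strongly disagree to 5 = strongly agree), the grouping of codes 4 and 5 as agreement, and the function name `summarize_item` are assumptions not stated in the study, and the sample responses are hypothetical, not study data.

```python
from collections import Counter

# Assumed coding of the five-point Likert scale (not specified in the study):
# 1 = strongly disagree, 2 = disagree, 3 = neutral/unsure, 4 = agree, 5 = strongly agree
AGREE = {4, 5}
NEUTRAL = {3}
DISAGREE = {1, 2}


def summarize_item(responses):
    """Return frequency and percentage of agree/neutral/disagree for one item.

    `responses` is a list of integer Likert codes (1-5) for a single question.
    Percentages are rounded to one decimal place.
    """
    if not responses:
        raise ValueError("no responses for this item")
    for r in responses:
        if r not in AGREE | NEUTRAL | DISAGREE:
            raise ValueError(f"invalid Likert code: {r}")

    counts = Counter(responses)  # frequency of each individual code
    n = len(responses)
    groups = {
        "agree": sum(counts[c] for c in AGREE),
        "neutral": sum(counts[c] for c in NEUTRAL),
        "disagree": sum(counts[c] for c in DISAGREE),
    }
    # Convert each collapsed group count to a percentage of all respondents
    return {
        label: {"n": k, "percent": round(100 * k / n, 1)}
        for label, k in groups.items()
    }


if __name__ == "__main__":
    # Hypothetical responses from 10 students to one item (illustrative only)
    item = [5, 4, 4, 3, 2, 4, 5, 1, 3, 4]
    for label, stats in summarize_item(item).items():
        print(f"{label}: n={stats['n']}, {stats['percent']}%")
```

For the ten hypothetical responses, the script reports 6 agree (60.0%), 2 neutral (20.0%) and 2 disagree (20.0%). Because each group is rounded separately, the three percentages for an item may not sum to exactly 100%.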

RESULTS

Analysis of the questionnaire

82% and 77% of students agreed that the assessment was fair and uniform, respectively. 38.5% felt the practical assessment was stressful, while 41.3% felt it was not and 19.5% were unsure. Around 63% to 69% of students felt that luck, chance factors and humble nature influenced their scores, and 60% to 63% indicated that examiner-related factors influenced their scores (Table 1). On average, 72.5% of students felt that the number, type and complexity of questions asked by different examiners could affect their scores. On average, 56.6% of students indicated that there was no gender, nationality or regional bias influencing their scores. 76.8% and 53.1% of students felt that communication skills and dressing skills, respectively, had an impact on their practical assessment.

Analysis of open-ended questions

The most commonly recurring feedback responses from the students are tabulated below. Despite its subjectivity, the existing pattern of practical examination was appreciated by the students in many ways. Some students acknowledged the good conduct of the examination, the adequate time, and the uniform and fair assessment.

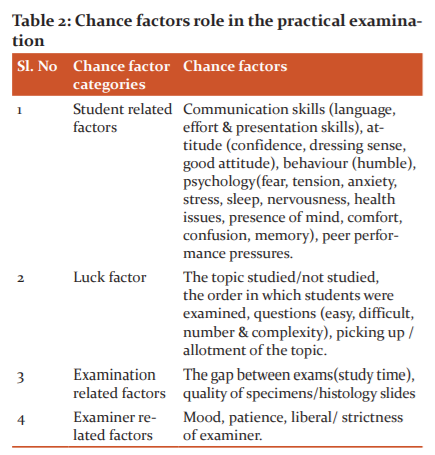

Chance factors and their role

The following are the possible chance factors (Table 2) that the students felt played a role in the practical examination assessment, making the examination system more subjective than objective.

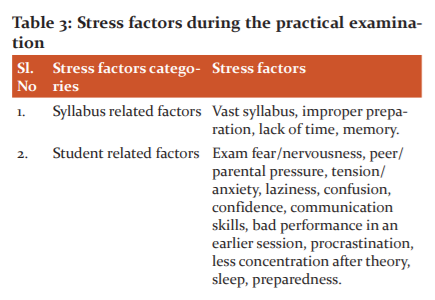

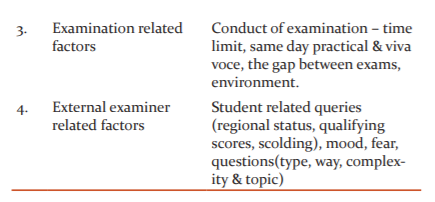

Stress factors and their role

The students identified the following factors as responsible for stress during the practical examinations (Table 3).

DISCUSSION

The feedback analysis points to a need for the examination to be more objective. The practical examinations should be scheduled in a student-friendly way, with adequate time for preparation. The examination pattern should stress uniformity, with equal time and similar questions for all students. Emphasis should be placed on good-quality slides and specimens. More importance should be given to formative and continuous assessment rather than to the final assessment alone. The practical assessment should be more skill-oriented and checklist-based. Guidelines should be laid down for examiners to avoid bias of any kind. The Competency-Based Medical Education (CBME) curriculum aims to address most of the deficiencies of the traditional practical examination. With the introduction of CBME, the focus of assessment will shift from assessment of learning to assessment for learning, and formative feedback will be crucial for competency development.[4]

The Objective Structured Practical Examination (OSPE) has been reported to be a better assessment technique than the Traditional Practical Examination, as it improves students' performance in laboratory exercises.[1] OSPE has also been found to be a significantly higher-scoring and preferred method of examination compared with the Traditional Practical Examination (TDPE) in the assessment of the laboratory component and viva voce in Physiology.[2] The pass percentage was higher with viva voce, but high scores and distinctions were obtained more often with OSPE. Viva voce nevertheless needs to be continued, as it is the only assessment tool that evaluates students' communication skills, power of explanation, interpretation, confidence and retention abilities.[2] OSPE increases objectivity and reduces subjectivity compared with the conventional viva.[5]

CONCLUSION

The students felt that the traditional practical examination was fair, uniform and, to a certain extent, free of bias, yet stressful, with chance factors such as luck, humble nature, dressing style and communication skills influencing their scores. The students also reported that examiner-related factors influenced their scores. The new competency-based medical curriculum has incorporated alternative methods of evaluation that are expected to provide fair and consistent techniques for evaluating students. Feedback collected on the practical component of competency-based assessment would reveal its advantages and disadvantages relative to the traditional assessment.

ACKNOWLEDGEMENT: Our sincere thanks to Mrs Radhika, statistician, for her guidance in the statistical analysis. The authors acknowledge the immense help received from the scholars whose articles are cited and included in the references of this manuscript. The authors are also grateful to the authors, editors and publishers of all the articles, journals and books from which the literature for this article has been reviewed and discussed.

SOURCE OF FUNDING: Nil

CONFLICT OF INTEREST: Authors declare that they do not have any conflict of interest

References:

1. Nigam R, Mahawar P. Critical Analysis of performance of MBBS students using OSPE & TDPE - A Comparative Study. Natl J Community Med 2011;2(3):322-324.

2. Rehman R, Syed S, Iqbal A, Rehan R. Perception and performance of medical students in objective structured practical examination and viva voce. Pak J Physiol 2012;8(2):33-36.

3. Abraham RR, Raghavendra R, Surekha K, Asha K. A trial of objective structured practical examination at Melaka Manipal Medical College India. Adv Physiol Educ 2009;33(1):21-23.

4. Lockyer J, Carraccio C, Chan MK, Hart D, Smee S, Touchie C, et al. Core principles of assessment in competency-based medical education. Med Teach 2017;39(6):609-616.

5. Sabnis AS, Bhosale Y. Standardization of evaluation tool by comparing spot, unstructured viva with objective structured practical examination in microanatomy. Int J Med Clin Res 2012;3(7):225-228.


This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License
